Artificial Intelligence (AI) has moved from futuristic promise to everyday reality. From customer service chatbots and intelligent search assistants to tools that write, summarize, and even design — AI is now woven into how people work, learn, and communicate. At the center of this transformation lies one of the most powerful innovations in modern computing: the Large Language Model (LLM).

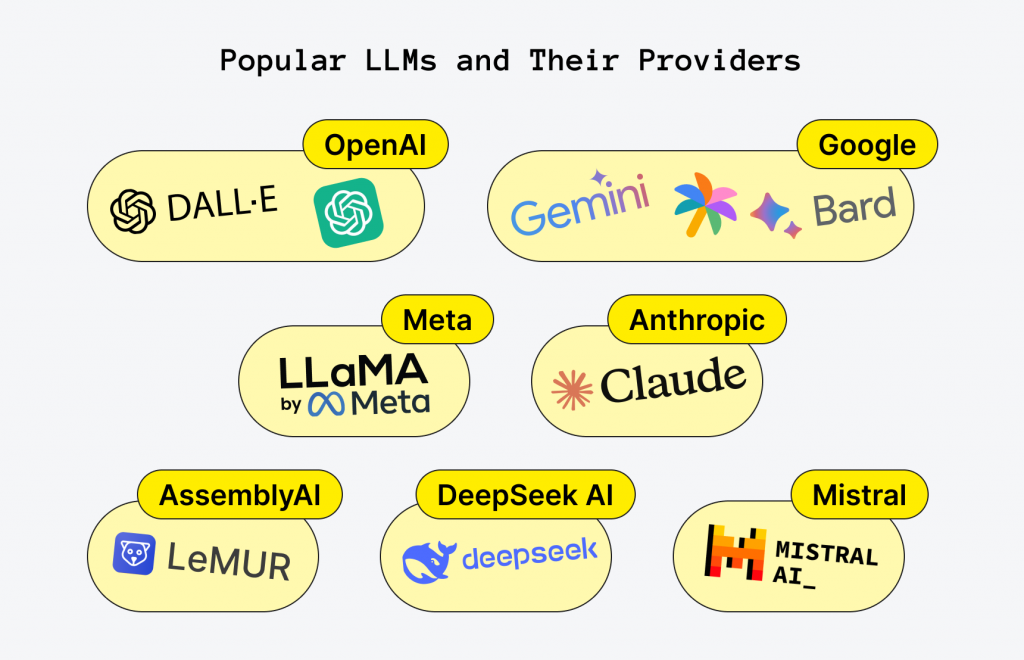

A Large Language Model is an advanced type of AI trained on massive datasets of text — books, websites, research papers, and code — to understand and generate human language. These models power popular systems such as ChatGPT, Google Gemini, and Anthropic’s Claude, enabling them to answer questions, draft content, analyze data, and interact naturally with users.

By 2025, LLMs have become the backbone of generative AI systems driving global business adoption. According to IBM, LLMs represent a major shift from traditional AI because they can interpret context, learn from data, and produce coherent human-like responses across industries.

This article breaks down everything you need to know about Large Language Models — what they are, how they work, where they’re used, and why they’re reshaping the future of business and technology. You’ll also learn about their training process, benefits, limitations, and the direction LLMs are heading next.

What Are LLMs?

A Large Language Model (LLM) is a type of artificial intelligence trained to understand, generate, and process human language. The term “large” refers to the enormous amount of data and parameters these models use—ranging from billions to trillions—while “language model” describes their primary function: predicting and generating words based on context.

In simple terms, an LLM learns by analyzing patterns in vast text datasets such as books, research papers, articles, websites, and even code. Through this exposure, it identifies how words, phrases, and ideas connect—allowing it to respond in ways that feel natural and contextually relevant.

For example, when you ask ChatGPT or Google Gemini a question, the model doesn’t “know” facts the way humans do. Instead, it predicts the most probable next words based on its training data, forming coherent and meaningful responses.

LLMs are built using deep learning techniques—particularly Transformer architectures—that help them process entire sequences of text simultaneously rather than word by word. This allows them to understand relationships between words across long passages, which is why they can summarize, translate, and write with such fluency.

According to IBM, modern LLMs are capable of performing multiple language-related tasks such as summarization, reasoning, code generation, and sentiment analysis—all without task-specific programming. Similarly, Cloudflare describes LLMs as the “foundation of generative AI,” powering the systems that enable machines to interact with humans through natural language.

In essence, a Large Language Model acts as the linguistic engine behind generative AI. It transforms raw data into meaningful language, bridging the gap between human intent and machine understanding.

How Are Large Language Models and AI Related?

LLMs are a subset of artificial intelligence, specifically within the field of natural language processing (NLP). While AI encompasses everything from image recognition to robotics, LLMs focus on language understanding and generation.

In a nutshell: AI is the broad field, NLP is the branch of AI concerned with language, and LLMs are one family of models within NLP. So, while all LLMs are AI, not all AI systems are LLMs. LLMs are the linguistic brains of the AI family.

Applications of Large Language Models

The versatility of LLMs is what makes them revolutionary. Here are some of their most impactful applications across industries:

- Customer Service: LLM-powered chatbots and virtual assistants provide 24/7 customer support, handle common queries, and even analyze customer sentiment to provide more effective responses.

- Content Generation: Businesses use LLMs to create high-quality content, including blog posts, articles, social media captions, and product descriptions, which saves time and resources.

- Data Analysis & Insights: LLMs can process vast amounts of unstructured data—such as customer feedback, social media posts, and internal communications—to extract valuable insights, analyze sentiment, and support business intelligence.

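- Recruitment and HR: LLMs automate tasks like resume screening, candidate sourcing, and virtual interviews, helping to identify suitable candidates more efficiently. With careful auditing they may also help reduce unconscious bias, though they can reproduce biases present in their training data.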

- Code Generation: LLMs can generate code snippets, refactor existing code, and assist with debugging, significantly accelerating software development cycles.

- Fraud Detection: In finance and other sectors, LLMs analyze transaction data in real time to detect suspicious patterns and prevent fraudulent activities. Mastercard, for example, uses LLMs for this purpose.

- Supply Chain Management: LLMs are transforming supply chain operations by improving demand forecasting, managing inventory, and optimizing logistics. They can analyze vast amounts of data—including historical sales, weather patterns, and market trends—to predict demand shifts and proactively manage risks.

- Legal and Medical Fields: In highly specialized fields, LLMs serve as powerful assistants for professionals.

  - Legal: LLMs can draft legal documents and contracts, summarize complex case law, and perform e-discovery by quickly sifting through thousands of documents. They can assist lawyers in building stronger arguments by identifying relevant precedents and statutes.

  - Medical: LLMs are being used for clinical decision support, helping physicians with initial and differential diagnoses by analyzing patient data and medical literature. They can also automate administrative tasks, such as summarizing patient records and generating clinical documentation, which reduces the burden on healthcare staff and allows them to focus more on patient care.

- Education and Training: The education sector is leveraging LLMs to create personalized and efficient learning experiences.

  - Personalized Learning: LLMs can act as personalized tutors, providing customized learning plans and real-time feedback based on a student’s individual needs, learning style, and performance.

  - Content and Assessment: They can generate educational content like quizzes and study guides and even automate the grading process, providing instant feedback to students and saving educators time.

  - Accessibility: LLMs can simplify complex texts and translate learning materials into multiple languages, making education more accessible and inclusive.

- Marketing and Advertising: LLMs help businesses create more targeted and effective marketing campaigns. By analyzing customer data, LLMs can identify trends and preferences, which enables the creation of highly personalized advertisements and product recommendations. They also automate the generation of marketing copy for emails, social media, and landing pages, ensuring content is tailored to specific audience segments.

Expert Insight:

“LLMs are now the connective tissue of modern business intelligence. Their adaptability allows every function—from marketing to compliance—to benefit from AI without retraining models from scratch.”

— Dr. Rucha Joshi, Senior Research Scientist, IBM AI Labs

LLM Market Overview

The Large Language Model (LLM) market is witnessing explosive growth, fueled by the rising adoption of AI-powered automation across sectors such as technology, retail, healthcare, and finance. Organizations are increasingly relying on LLMs to improve communication, streamline workflows, and enhance decision-making—driving unprecedented market expansion.

Key Market Insights

- Market Size: The global LLM market was valued at approximately $6.02 billion in 2024, underscoring the rapid commercialization of AI-driven tools and applications.

- Projected Growth: Analysts predict remarkable expansion, with the market expected to reach $36.1 billion by 2030 and potentially $84.25 billion by 2033. This translates to a Compound Annual Growth Rate (CAGR) exceeding 33%, making it one of the fastest-growing segments in the AI industry.

- Regional Dominance: North America currently leads the global LLM market. The region’s dominance is driven by advanced technological infrastructure, early enterprise adoption, and the strong presence of AI leaders such as OpenAI, Google, Microsoft, and Anthropic.

- Leading Segments: In 2024, chatbots and virtual assistants accounted for over 26% of market revenue, highlighting how conversational AI is becoming central to digital transformation. Among end-user industries, the retail and e-commerce sector emerged as the largest contributor, leveraging LLMs for customer engagement, recommendation systems, and personalized shopping experiences.

- Model Size Trends: LLMs with 10 billion to 50 billion parameters are gaining popularity. These models strike the right balance between high linguistic performance and manageable computational costs—making them ideal for enterprise use cases without requiring massive infrastructure investments.
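
The growth rate quoted above can be sanity-checked with the standard compound annual growth rate formula. The short sketch below plugs in the article's own figures (2024 as the base year); the function name `cagr` is just an illustrative choice.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly rate that
    turns start_value into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1

# Market sizes in billions of USD, taken from the figures quoted above.
rate_to_2030 = cagr(6.02, 36.1, 2030 - 2024)
rate_to_2033 = cagr(6.02, 84.25, 2033 - 2024)

print(f"2024 to 2030: {rate_to_2030:.1%}")
print(f"2024 to 2033: {rate_to_2033:.1%}")
```

Both rates come out a little above 33%, which is consistent with the "exceeding 33%" claim.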

The market’s rapid evolution reflects how organizations are shifting from exploring AI to embedding it directly into operational and customer-facing systems.

LLM Adoption and Usage

The adoption of Large Language Models is accelerating at a record pace, transitioning from experimental pilots to mainstream enterprise integration. Businesses now view LLMs not as optional tools but as essential drivers of productivity, creativity, and efficiency.

Key Adoption Trends

- Enterprise Adoption: According to McKinsey, nearly 78% of organizations now use AI in at least one business function, while 71% of enterprises report active integration of generative AI—a clear indicator that LLMs are becoming foundational to corporate strategy.

- Daily Usage: Studies show that 83% of professionals use AI tools at least weekly for work, and about 37.3% interact with AI chatbots daily, underscoring their growing role in daily workflows and problem-solving.

- Impact on Productivity: Research confirms that LLMs meaningfully improve efficiency. One study found that using ChatGPT reduced the time required for writing tasks by 37% and improved overall output quality by 18%, showcasing how AI can enhance both speed and precision.

- Work Quality and Confidence: Nearly 88% of professionals believe that AI tools and LLMs improve the quality of their work, helping them generate better ideas, communicate more effectively, and reduce routine workload stress.

- Spending and Investment: The financial commitment toward LLMs is also rising. Enterprises currently invest an average of $1.9 million in generative AI initiatives, and by 2025, an estimated 72% of organizations plan to increase their LLM budgets to expand capabilities, improve accuracy, and integrate custom models into existing systems.

As AI expert and author Kai-Fu Lee puts it: “I believe AI is going to change the world more than anything in the history of humanity. More than electricity.”

How Does an LLM Work?

At their core, Large Language Models (LLMs) are highly sophisticated “next-word prediction” engines. Their remarkable ability to generate coherent, context-aware, and human-like text stems from a complex, multi-layered process rooted in deep learning and a groundbreaking architecture known as the Transformer.

1. The Foundational Principle: Statistical Prediction

LLMs operate on a fundamental principle: estimating the probability of a token (a word, part of a word, or even a punctuation mark) appearing in a sequence. When you give an LLM a prompt, it doesn’t “understand” the meaning in a human sense. Instead, it breaks your input into tokens and uses the patterns encoded in its parameters to calculate the most statistically probable next token.

Consider the simple phrase, “The cat sat on the _____.” Based on its training data, an LLM knows that words like “mat,” “rug,” or “floor” are highly probable to follow. It assigns a probability to every token in its vocabulary and then picks one: either the single most likely token or a sample drawn from the high-probability candidates, depending on the generation settings. This process is repeated sequentially, token by token, to form a complete response, paragraph, or even an entire article.
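
To make the prediction step concrete, here is a minimal sketch in Python. The scores in `logits` are invented for illustration (a real model computes them with a neural network over a vocabulary of many thousands of tokens), and the example uses the simplest selection rule, a greedy pick of the most probable token.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores for candidates that could complete
# "The cat sat on the ____".
logits = {"mat": 3.2, "rug": 2.1, "floor": 1.9, "moon": -1.0}

probs = softmax(logits)
next_token = max(probs, key=probs.get)  # greedy choice: highest probability

print("chosen token:", next_token)
for tok, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{tok:>5}: {p:.3f}")
```

Generating a full response means appending the chosen token to the prompt and running the same step again.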

2. The Engine: Deep Learning and Neural Networks

LLMs are a form of deep learning, a subset of machine learning that uses multi-layered neural networks. Just as the human brain’s neurons connect and send signals, an artificial neural network consists of interconnected nodes organized into layers.

- Input Layer: Receives the initial data (your prompt).

- Hidden Layers: These are the “deep” part of the network. LLMs have many hidden layers where the model processes and transforms the input data to identify complex patterns and relationships. Each connection between nodes carries a “weight,” and each node has a “bias”; both are adjusted during training to minimize prediction errors.

- Output Layer: Produces the final prediction, which is a probability distribution over the model’s entire vocabulary.
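
The three layers can be sketched as a tiny forward pass. Everything here is a toy assumption: the numbers are made up, the network has one hidden layer instead of dozens, and the "vocabulary" has only three entries. The shape of the computation (weighted sums, biases, a probability distribution at the end) is the part that carries over.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """A common activation function: negative values become zero."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Input layer: a made-up numeric representation of the prompt.
x = [0.5, -1.0, 2.0]

# Hidden layer: 2 nodes, each with 3 incoming weights and 1 bias.
W1 = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
b1 = [0.1, -0.2]

# Output layer: one score per entry in a 3-token toy vocabulary.
W2 = [[1.0, -1.0], [0.5, 0.5], [-0.3, 0.8]]
b2 = [0.0, 0.1, -0.1]

hidden = relu(dense(x, W1, b1))
probs = softmax(dense(hidden, W2, b2))
print(probs)
```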

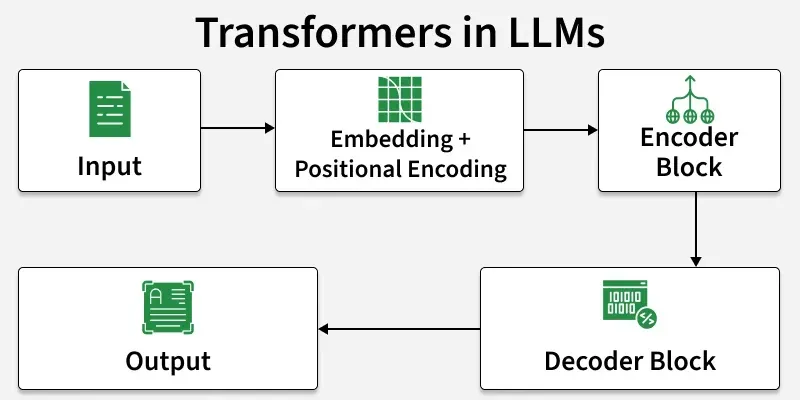

3. The Architecture: The Transformer Model

The real breakthrough that enabled LLMs was the invention of the Transformer architecture in 2017. Before Transformers, most language models (like Recurrent Neural Networks or RNNs) processed text sequentially, one word at a time. This made it difficult for them to remember and reference information from earlier in a long sentence or document.

The Transformer architecture changed this by introducing a core innovation: the self-attention mechanism.

- Self-Attention: This mechanism allows the model to weigh the importance of every word in an input sequence in relation to every other word. When the model processes a word, it doesn’t just look at the words immediately before it; it can look at the entire context of the sentence or paragraph. For example, in the sentence “The city’s famous river is a beautiful place, and it flows quickly,” the self-attention mechanism helps the model understand that “it” refers to “the river.” This parallel processing capability is what allows LLMs to handle vast amounts of text and understand long-range dependencies, something older models struggled with.
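
Here is a stripped-down sketch of self-attention using scaled dot-product scores. It makes two simplifying assumptions that real Transformers do not: the token vectors are hand-picked rather than learned, and each vector is used directly as its own query, key, and value (real models first apply learned projection matrices).

```python
import math

def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention over a list of token vectors.
    Returns the context-mixed vectors and the attention weights."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # New representation: weighted average of all token vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 3-dimensional vectors; "it" is deliberately close to "river".
tokens = ["river", "place", "it"]
embeddings = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.8]]

outputs, weights = self_attention(embeddings)
it_weights = dict(zip(tokens, weights[2]))
print(it_weights)
```

With these vectors, "it" puts more attention weight on "river" than on "place", which is the behavior the example sentence describes. Because every token's scores are computed independently, the loop over queries can run in parallel on real hardware.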

The original Transformer consists of two main parts, though many LLMs use only one of them (the GPT family, for example, is decoder-only):

- Encoder: Processes the input text, converting it into a numerical representation that captures its meaning and context.

- Decoder: Takes this numerical representation and generates the output text, one token at a time.

How Are LLMs Trained?

LLMs are trained in a multi-stage process that builds upon a foundation of broad language understanding and refines it for specific tasks and behaviors. This process can be broken down into three main phases: pre-training, fine-tuning, and reinforcement learning from human feedback (RLHF).

1. Pre-training

This is the initial and most resource-intensive phase. The goal is to give the model a broad, foundational understanding of language, grammar, facts, and reasoning.

- Massive Dataset: The model is trained on a massive, diverse, and raw dataset, often comprising trillions of words from sources like books, articles, websites (e.g., Common Crawl), and code. This is self-supervised learning (often loosely called unsupervised): the data carries no human-written labels, because the next token in the text itself serves as the training target.

- The Task: The primary task during pre-training is “next-token prediction.” The model is given a sequence of text and learns to predict the next word or token. For example, if the input is “The cat sat on the…”, the model’s job is to predict the word “mat,” “rug,” or another highly probable word.

- Outcome: Through this process, the model learns the statistical relationships between words and phrases. It builds a vast internal representation of language and knowledge, without any specific task in mind.
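
The idea of learning statistical relationships from raw text can be illustrated with a deliberately tiny stand-in: a bigram model that simply counts which token follows which. This is an assumption-laden simplification, since real LLMs learn by adjusting neural network weights over long contexts rather than by counting pairs, but the training signal (the next token in the text) is the same.

```python
from collections import Counter, defaultdict

# A toy "training corpus"; real pre-training data is trillions of tokens.
corpus = ("the cat sat on the mat . the dog sat on the rug . "
          "the cat sat on the mat .")
tokens = corpus.split()

# Count how often each token follows each other token.
follow_counts = defaultdict(Counter)
for current, nxt in zip(tokens, tokens[1:]):
    follow_counts[current][nxt] += 1

def next_token_probs(token):
    """Estimated probability of each token that followed `token` in the corpus."""
    counts = follow_counts[token]
    total = sum(counts.values())
    return {t: c / total for t, c in counts.items()}

probs = next_token_probs("the")
print(probs)
print(next_token_probs("sat"))
```

No labels were written by hand here: the text itself supplies both the input and the correct answer.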

2. Fine-tuning

After pre-training, the model is a generalist with a massive amount of knowledge, but it doesn’t know how to follow specific instructions or perform particular tasks. Fine-tuning solves this.

- Supervised Learning: This phase uses a smaller, high-quality, labeled dataset of prompt-response pairs. For example, a pair might look like: Prompt: “Summarize this article.” Response: “[A human-written summary].”

- The Task: The model is explicitly trained to follow instructions, generate specific types of content, or adapt to a particular domain. This is often called instruction tuning. It learns to map a given input prompt to a desired output format.

- Outcome: Fine-tuning transforms the LLM from a simple next-word predictor into a capable assistant that can follow commands. It’s what makes the difference between a raw language model and a useful chatbot. This phase is less computationally expensive than pre-training.
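
A prompt-response pair has to be turned into a training example before the model can learn from it. The sketch below shows one common way to do that, under stated assumptions: whitespace splitting stands in for a real tokenizer, `build_example` is a hypothetical helper, and the loss mask (train only on the response tokens) is one popular choice rather than the only one.

```python
def build_example(prompt, response):
    """Join a prompt and its target response into one token sequence,
    plus a mask marking which tokens the model is trained to produce."""
    prompt_tokens = prompt.split()      # stand-in for a real tokenizer
    response_tokens = response.split()
    tokens = prompt_tokens + response_tokens
    # 0 = ignored by the training loss, 1 = model must learn to predict it
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

tokens, loss_mask = build_example(
    "Summarize this article: LLMs predict tokens.",
    "LLMs guess the next token.",
)

for tok, flag in zip(tokens, loss_mask):
    print(f"{flag}  {tok}")
```

The training objective is still next-token prediction; what changes is the data, which now demonstrates the instruction-following behavior the model should imitate.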

3. Reinforcement Learning from Human Feedback (RLHF)

This is the final, crucial step for aligning the model with human values and preferences. It’s how models are made to be more helpful, honest, and harmless.

- Human Feedback: The process involves human reviewers who rank multiple responses generated by the model for a given prompt. They provide feedback on which responses are better, based on factors like helpfulness, accuracy, tone, and safety.

- The Reward Model: The human rankings are used to train a separate “reward model.” This model learns to predict human preferences and assign a numerical score to a model’s response.

- Reinforcement Learning: The LLM is then fine-tuned again, but this time it is guided by the reward model. The LLM’s goal is to generate responses that maximize the “reward” score assigned by the reward model. This continuous feedback loop reinforces good behaviors and discourages unwanted ones like “hallucinations” or biased language.
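
How does a reward model learn from rankings? A commonly used approach (the article does not specify one, so treat this as an assumption) is a pairwise loss: for each pair of responses, the loss is small when the response humans preferred receives the higher score. The reward values below are invented for illustration.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise (Bradley-Terry style) loss: -log(sigmoid(chosen - rejected)).
    Small when the human-preferred response scores higher."""
    margin = reward_chosen - reward_rejected
    return -math.log(1.0 / (1.0 + math.exp(-margin)))

# Hypothetical reward scores for two responses to the same prompt.
agrees_with_humans = preference_loss(reward_chosen=2.0, reward_rejected=-1.0)
disagrees_with_humans = preference_loss(reward_chosen=-1.0, reward_rejected=2.0)

print(f"reward model agrees with ranking:    loss = {agrees_with_humans:.3f}")
print(f"reward model disagrees with ranking: loss = {disagrees_with_humans:.3f}")
```

Minimizing this loss over many ranked pairs pushes the reward model's scores toward human preferences; the LLM is then tuned to produce responses that score well.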

How Do LLMs Help Businesses?

Large Language Models (LLMs) are transforming how organizations operate, innovate, and serve customers. By turning data into insights and automating communication-heavy tasks, they’re helping businesses work faster, smarter, and more efficiently.

1. Enhanced Productivity

LLMs automate routine tasks such as drafting emails, summarizing meetings, generating reports, and managing customer queries. This allows employees to focus on higher-value work like innovation and strategy.

For example, marketing teams can instantly draft ad copy, while HR departments can quickly summarize resumes or policies—saving hours of manual effort.

2. Improved Decision-Making

LLMs analyze unstructured data—reviews, reports, surveys—and extract key insights to support data-driven decisions. Executives can get quick summaries of sales trends or recurring customer issues, enabling faster, more informed responses.

This helps leaders move from reactive decision-making to proactive strategy.

3. Personalization at Scale

LLMs help deliver customized experiences by understanding customer intent and behavior.

E-commerce and media companies use them to recommend products or content tailored to individual users, increasing engagement and loyalty. With LLMs, personalization that once required large teams is now automated and scalable.

4. Cost Efficiency

Automating repetitive communication, documentation, and customer service tasks reduces labor costs and increases efficiency. Businesses can scale support operations, handle large volumes of requests, and maintain quality without expanding staff—saving both time and money.

5. Competitive Advantage

Early adopters of LLMs enjoy greater agility, faster innovation, and stronger customer engagement. They can adapt quickly to market changes, test new ideas rapidly, and bring products to market sooner—gaining a lasting competitive edge.

Benefits of Large Language Models

Large Language Models (LLMs) are redefining how people and businesses communicate, create, and operate. Below are their most impactful advantages:

1. Human-Like Communication

LLMs understand context, tone, and intent—allowing them to converse naturally and adapt their style for different audiences. Whether answering customer queries or drafting business emails, they make machine interaction feel human and intuitive.

2. Multilingual Capabilities

Modern LLMs can read, translate, and write in multiple languages, enabling global communication without language barriers. Businesses can now offer consistent multilingual support and marketing at scale.

3. Creativity & Ideation

LLMs act as creative partners—helping generate ideas, headlines, scripts, and product concepts. By sparking fresh perspectives, they boost creativity across marketing, content, and design teams.

4. Automation of Routine Tasks

They streamline repetitive communication tasks such as drafting emails, summarizing reports, or creating FAQs—freeing teams to focus on strategy and innovation while reducing time and labor costs.

5. Data Interpretation and Summarization

LLMs can digest and explain complex data, from technical papers to analytics dashboards. This turns dense information into clear, actionable insights for decision-makers and non-technical users alike.

6. Accessibility for All Users

By allowing natural language interactions, LLMs make advanced tools usable for everyone—no coding or technical expertise required. Small businesses and individuals can leverage AI for marketing, research, and automation with ease.

7. Continuous Learning and Adaptability

With ongoing updates and human feedback, LLMs evolve to deliver more accurate and relevant results over time—making them sustainable, future-ready tools for long-term use.

8. Human-AI Collaboration

Ultimately, LLMs enhance rather than replace human intelligence. They work as collaborative partners—amplifying creativity, productivity, and innovation across industries.

Challenges of Large Language Models

Despite their incredible potential, Large Language Models are not without limitations. As businesses and developers increasingly rely on LLMs for automation and decision-making, it’s essential to understand the challenges that come with their use.

1. Data Bias

LLMs learn from vast datasets collected from the internet, books, and other public sources. However, this data often contains social, cultural, and demographic biases—and the model can unknowingly reproduce them. For example, biased training data might lead to stereotypes in job recommendations or unequal representation in language translation.

To counter this, developers must apply bias mitigation techniques and use more diverse, carefully curated datasets to ensure fairness and inclusivity.

2. Hallucinations (Incorrect or Fabricated Outputs)

One of the most well-known limitations of LLMs is their tendency to “hallucinate”—that is, to generate plausible-sounding but false information. Because LLMs predict words based on patterns rather than verifying facts, they can sometimes produce inaccurate statements, incorrect citations, or fictional details.

This poses risks in fields like healthcare, law, or journalism, where accuracy is non-negotiable. Ongoing research and fact-verification mechanisms are helping reduce these hallucinations, but they remain a key area of concern.

3. High Resource and Energy Costs

Training an LLM from scratch demands massive computing infrastructure, power, and time.

For instance, models like GPT-4 or Gemini require thousands of GPUs or similar accelerators running for weeks or months, consuming immense amounts of electricity.

This makes LLM development expensive and raises environmental concerns about carbon footprint and energy efficiency.

To address this, organizations are exploring smaller, optimized models and parameter-efficient fine-tuning methods to balance performance with sustainability.

4. Data Privacy and Security

Because LLMs learn from public text, there’s a risk that sensitive or copyrighted material gets unintentionally included in the training data.

If mishandled, this could lead to privacy violations or intellectual property concerns.

Enterprises using LLMs must implement data anonymization, secure training pipelines, and compliance with privacy regulations like GDPR and HIPAA to safeguard user information.
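
As a small illustration of data anonymization, the sketch below masks email addresses before text is sent to a model or stored for training. It is a minimal example, not a complete solution: the pattern is a simplified assumption that will miss unusual addresses, and real pipelines also need to handle names, phone numbers, IDs, and other personal data.

```python
import re

# Simplified email pattern, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def redact_emails(text):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL.sub("[EMAIL]", text)

prompt = "Summarize the complaint from jane.doe@example.com about a late delivery."
safe_prompt = redact_emails(prompt)
print(safe_prompt)
```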

5. Explainability and Transparency

LLMs are often described as “black boxes” because it’s difficult to understand how they arrive at specific outputs.

This lack of explainability makes it challenging to trust AI decisions—especially in regulated sectors like finance, healthcare, and government.

AI researchers are now developing interpretability tools that visualize decision pathways or highlight which inputs most influenced an output, promoting greater transparency and accountability.

6. Ethical and Regulatory Concerns

As LLMs become more widespread, concerns around misuse, misinformation, and ethical responsibility are growing.

Deepfakes, automated propaganda, and AI-generated spam can all emerge from poorly regulated models.

Governments and tech leaders are working toward global AI governance frameworks that balance innovation with safety, ensuring these models are developed responsibly.

What Is the Future of LLMs?

The future of Large Language Models is both promising and transformative, signaling a new era of intelligent collaboration between humans and machines. As innovation accelerates, we’re witnessing LLMs evolve beyond text-based assistance into adaptive, multimodal systems that reshape industries, creativity, and everyday work.

We’re moving toward models that are:

1. Smaller Yet More Efficient

The next generation of LLMs will focus on optimization rather than size. Through techniques like quantization and pruning, developers are building lightweight, energy-efficient models that can run at the edge, directly on personal devices such as smartphones or laptops.

This shift will make AI more accessible, secure, and private—eliminating the constant need for cloud connectivity while reducing costs and latency.
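
Quantization is easier to picture with numbers. The sketch below shows one simple variant, symmetric 8-bit quantization, applied to a handful of made-up weights; production toolchains use more elaborate schemes (per-channel scales, calibration data), so treat this as an illustration of the idea only.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]
    using a single shared scale factor."""
    scale = (max(abs(w) for w in weights) / 127) or 1.0  # avoid a zero scale
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical weights; a real model has billions of these.
weights = [0.82, -0.41, 0.05, -1.27, 0.33]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("integers: ", q)
print("restored: ", [round(r, 3) for r in restored])
print("max error:", max_error)
```

Each weight now fits in one byte instead of the four bytes of a 32-bit float, at the cost of a small rounding error. That trade is what makes on-device models practical.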

2. Multimodal and Context-Aware

Future LLMs will go beyond language, seamlessly integrating text, images, video, and audio into a single intelligent system. Imagine an AI that can read a business report, interpret an accompanying chart, and summarize a video presentation—all in one workflow.

These multimodal models will enhance creative industries, education, design, and data visualization by making AI understanding as holistic as human perception.

3. Domain-Specific and Specialized

Industries such as healthcare, law, education, and finance will see the rise of domain-focused LLMs trained on verified, high-quality data relevant to their fields.

These specialized models will deliver greater precision, compliance, and contextual accuracy, reducing errors and improving reliability in high-stakes environments.

For instance, a medical LLM might assist in diagnostics and research, while a legal one could summarize contracts or analyze case law with built-in ethical safeguards.

4. Self-Improving and Continuously Learning

Traditional LLMs are static: they stop learning once training ends. Future versions will be self-improving, capable of updating their knowledge base through reinforcement learning and live feedback loops.

This adaptability ensures that models stay current with new information, regulations, and language trends without needing to be retrained from scratch, creating a dynamic and continuously evolving AI ecosystem.

5. Ethically Governed and Responsible

As AI adoption grows, global attention is turning toward responsible and transparent development.

Future LLMs will be built under stricter ethical frameworks focusing on fairness, data protection, and bias mitigation.

Governments, research institutions, and tech companies are collaborating to create standards that ensure LLMs serve humanity safely and ethically, balancing innovation with accountability.

FAQs

1. What makes an LLM different from traditional AI?

Traditional AI models are task-specific, while LLMs are general-purpose—capable of adapting to a wide range of language-based tasks.

2. How large are Large Language Models?

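Frontier models such as GPT-4 or Gemini are widely reported to use hundreds of billions to trillions of parameters, trained on terabytes of data, though their developers have not officially disclosed exact sizes for the largest versions.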

3. What is the difference between pre-training and fine-tuning an LLM?

These are two distinct phases in the training pipeline.

- Pre-training: This is the initial, large-scale, and unsupervised phase. The model is trained on a massive, diverse dataset (e.g., a large crawl of the public web) to develop a broad, foundational understanding of language, grammar, and general knowledge. This phase is computationally expensive and takes weeks or months.

- Fine-tuning: This is the subsequent, smaller-scale, and supervised phase. A pre-trained model is further trained on a smaller, high-quality, and task-specific dataset (e.g., customer support transcripts or legal documents). The goal is to specialize the model for a particular use case, adapting its behavior to a specific domain or task. Fine-tuning is far more resource-efficient than pre-training and can be completed in hours or days.

4. What is an LLM “parameter” and why do they have so many?

In the context of LLMs, parameters are the values that the model learns and adjusts during its training process. The primary types of parameters are weights and biases within the neural network. A weight is a numerical value that determines the importance of a connection between neurons, while a bias is a constant value that helps a neuron activate.

LLMs have billions, or even trillions, of parameters because this massive scale is what allows them to capture the immense complexity of human language. Each parameter contributes to the model’s ability to recognize patterns, understand context, and generate coherent text. The sheer number of parameters is directly correlated with the model’s capacity to store knowledge and perform a wide range of tasks.
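
To see how parameter counts add up, consider a fully connected layer: it has one weight per input-output connection and one bias per output node. The sketch below counts the parameters in a small two-layer block; the layer sizes are arbitrary illustrative choices, not those of any particular model.

```python
def dense_layer_params(inputs, outputs):
    """Parameters in one fully connected layer."""
    weights = inputs * outputs  # one weight per connection
    biases = outputs            # one bias per output node
    return weights + biases

# Hypothetical block: 512 -> 2048 -> 512 nodes.
layer_sizes = [512, 2048, 512]

total = sum(dense_layer_params(a, b)
            for a, b in zip(layer_sizes, layer_sizes[1:]))
print(f"{total:,} parameters")  # 2,099,712 parameters
```

Even this small block holds about two million parameters. Stack many much wider blocks and add attention layers and embeddings, and the totals reach the billions.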

5. What is the “temperature” parameter in LLM text generation?

The temperature parameter is a setting that controls the randomness and creativity of an LLM’s output. It acts as a “randomness dial” that influences the model’s token selection process.

- Low Temperature (e.g., 0.2): Makes the model’s output more deterministic and predictable. The model picks the most probable next token far more often, and as the temperature approaches 0 it almost always does. This is ideal for tasks requiring factual, consistent, or conservative answers, such as summarization or code generation.

- High Temperature (e.g., 0.8): Introduces more randomness into the token selection, allowing the model to choose less probable tokens. This results in more diverse, creative, and sometimes unexpected outputs. This is often used for creative writing, brainstorming, or generating conversational dialogue.
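
Under the hood, temperature is usually applied by dividing the model's raw scores by the temperature value before converting them to probabilities. The sketch below shows the effect on three made-up scores; the function name and the numbers are illustrative assumptions.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide scores by the temperature, then normalize to probabilities."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 0.8)

print("temperature 0.2:", [round(p, 3) for p in low])
print("temperature 0.8:", [round(p, 3) for p in high])
```

At 0.2 nearly all of the probability lands on the top-scoring token, while at 0.8 the other candidates keep a realistic chance of being sampled.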

Conclusion: Embracing the LLM Revolution

Large Language Models represent a monumental leap in how machines understand and interact with humans. They blur the lines between technology and conversation, making AI more accessible, powerful, and human-like than ever before.

For businesses, LLMs are more than just tools—they’re catalysts for transformation. Whether automating customer support, generating content, or turning data into insight, the organizations that harness these models wisely will define the next era of digital innovation.